How “Texture” affects the “What” (Identification) in Images

Understanding Scenes, Objects, Regions of Interest (ROI) and Micro-Patterns

Texture analysis characterizes and quantifies the spatial arrangement and variation of pixel intensities in an image, providing meaningful information beyond simple shape, color, or intensity values. This is a key challenge in computer vision and image processing, and it underpins capabilities such as Object and Material Identification, Region of Interest Identification, and Texture Characteristics Identification.

Texture is a primary signature for any scene, object, ROI or micro-pattern identification. For many objects, texture isn’t just a property, it is the object. A patch of grass, a gravel path, a wooden surface: strip away their texture, and you strip away their identity. In this blog we discuss:

texture analysis and features to address categories of “what” problems including the high level technical process and key application areas

key considerations when using texture features

transition to implementation aspects using texture descriptors such as LBP, Gabor, GLCM, and Wavelet Transforms

We further complement the discussion with our hands-on playground code (in Python, OpenCV, scikit-learn) for Texture Analysis Kit (TAK) on Google Colab, to aid those wishing to start experimenting quickly.

2.0. Categories of “What” Challenges

There are many ways to group the “what” challenges, and the categories differ depending on the sector and on current and emerging requirements. For simplicity, we keep the categorisation generic, as mentioned in the introduction: Object and Material Identification, Region of Interest Identification, and Texture Characteristics Identification. This allows us to flexibly apply various texture properties in systems that recognise objects, ROIs, and micro-patterns.

2.1. Object & Material Identification (Classification)

Texture is a crucial feature, often combined with color and shape, for distinguishing and recognizing objects.

The technical process involves:

Image preprocessing - read image and convert to grayscale —>

Feature Extraction (Gabor, GLCM, wavelet, or LBP) —> apply one or more descriptors to extract texture features from the image —>

Feature Vector Creation - combine the extracted features into a single combined feature vector for each pixel or region —>

Train for Clustering/Classification - the dataset of feature vectors and corresponding labels (object/material type) is used to train a machine learning classifier (e.g., SVM, Random Forest, k-NN) for identification —>

Deploy Classifier - use/deploy trained classifier to predict objects and materials in new images
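The steps above can be sketched end to end in a few lines. This is a minimal illustration, not the TAK playground code: it uses NumPy only, a basic 8-neighbour LBP histogram as the single descriptor, and a 1-nearest-neighbour rule in place of a trained SVM or Random Forest. The `make_texture` helper and its three synthetic “materials” are hypothetical stand-ins for real grayscale images.

```python
import numpy as np

def lbp_histogram(gray):
    """Normalised 256-bin histogram of basic 8-neighbour LBP codes."""
    g = np.asarray(gray, dtype=np.float64)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    codes = np.zeros(center.shape, dtype=np.int64)
    # Eight neighbours, clockwise starting from the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set this bit wherever the neighbour is at least as bright as the centre.
        codes |= (neighbour >= center).astype(np.int64) << bit
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()

def make_texture(kind, rng, size=48):
    """Hypothetical synthetic 'material': a 2-pixel pattern plus mild noise."""
    y, x = np.mgrid[0:size, 0:size]
    if kind == "horizontal_stripes":
        base = (y // 2) % 2
    elif kind == "vertical_stripes":
        base = (x // 2) % 2
    else:  # "checker"
        base = ((x // 2) + (y // 2)) % 2
    return base * 200.0 + rng.normal(0.0, 5.0, (size, size))

def nearest_neighbour_predict(train_X, train_y, x):
    """Return the label of the training vector closest to x (L1 distance)."""
    distances = np.abs(train_X - x).sum(axis=1)
    return train_y[int(np.argmin(distances))]

rng = np.random.default_rng(0)
kinds = ["horizontal_stripes", "vertical_stripes", "checker"]

# Feature vector creation and "training": five labelled samples per class.
train_X = np.array([lbp_histogram(make_texture(k, rng))
                    for k in kinds for _ in range(5)])
train_y = [k for k in kinds for _ in range(5)]

# Deployment: classify one unseen image of each class.
predictions = [nearest_neighbour_predict(train_X, train_y,
                                         lbp_histogram(make_texture(k, rng)))
               for k in kinds]
print(predictions)
```

In a real project the histogram would typically be concatenated with other descriptors (Gabor, GLCM, wavelet) before training, and the nearest-neighbour rule would be replaced by whichever classifier validates best on held-out data.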

Material Identification: Determining the type of material (e.g., wood, metal, fabric, stone) based on its surface characteristics.

In medical image analysis, classifying tissue types (e.g., healthy vs. diseased tissue in medical scans) or identifying specific cellular structures based on their texture.

In surface defect detection, identifying the presence and type of defects on a surface by analyzing textural anomalies.

Object Recognition: Classifying objects by their distinctive textures (e.g., identifying different types of plants, textiles, or food items).

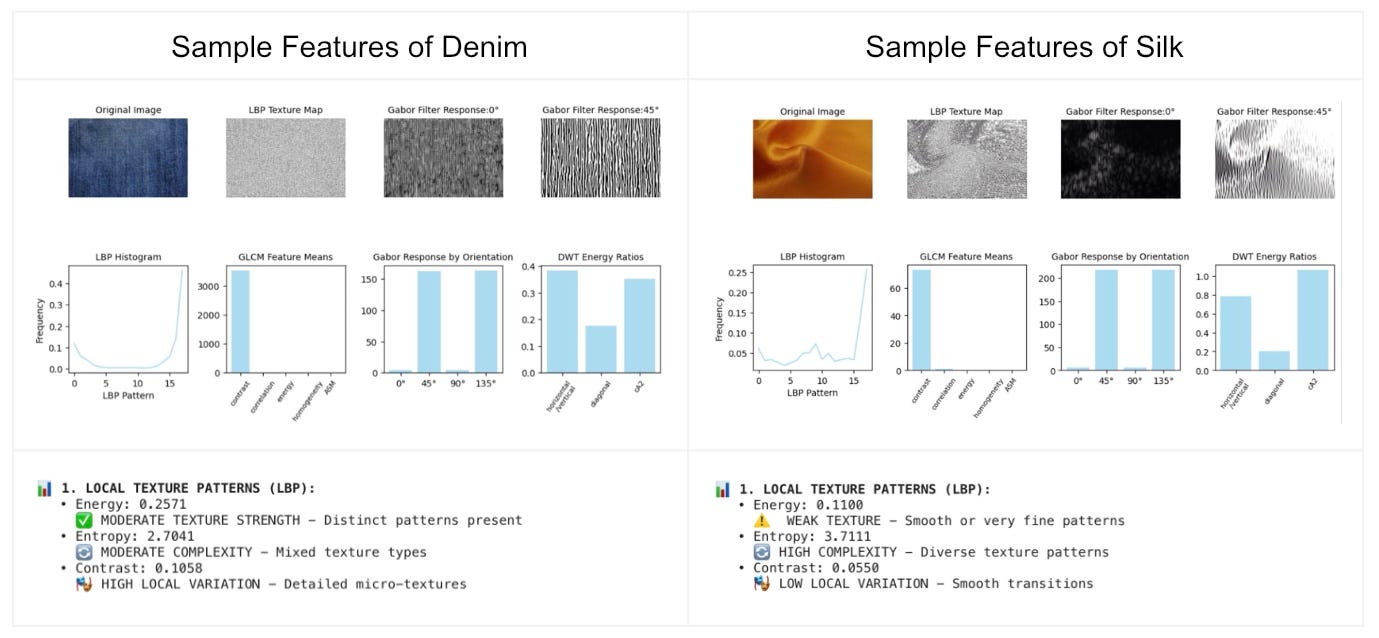

Illustration 3 - Sample Texture Analysis for various types of Fabrics

2.2. Region of Interest Identification (Segmentation)

Texture analysis is extensively used to define and characterize specific areas of interest within an image, particularly in specialized fields like medical imaging or manufacturing.

The technical process involves:

Image preprocessing - read image and convert to grayscale, optionally, apply noise reduction with a Gaussian filter —>

Feature extraction (Gabor, GLCM, Wavelet, LBP) —> apply one or more descriptors to extract texture features from the image —>

Feature Vector Creation - combine the extracted features into a single combined feature vector for each pixel or region —>

Train for Classification - the dataset of feature vectors and corresponding labels (ROI/non-ROI) is used to train a machine learning classifier (e.g., SVM) that can distinguish between ROI and non-ROI areas based on the texture features —>

Deploy for Segmentation - apply the trained model to the image to classify each pixel as belonging to the ROI or the background, effectively segmenting the image —>

Refinement - use post-processing techniques, such as morphological operations (dilation and erosion), to clean up the segmented ROI.
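A compact way to see these steps working together is sketched below, under simplifying assumptions. It uses NumPy only, a single per-pixel texture feature (local standard deviation in a 7x7 window), and a midpoint threshold learned from one labelled scene instead of an SVM. The `make_scene` helper, with its smooth background and rough square ROI, is hypothetical test data. The optional Gaussian noise reduction is skipped here because the synthetic texture is itself noise.

```python
import numpy as np

def box_mean(img, radius):
    """Mean over a (2r+1)x(2r+1) window, via edge padding and an integral image."""
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    integral = np.pad(padded, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)
    total = (integral[k:, k:] - integral[:-k, k:]
             - integral[k:, :-k] + integral[:-k, :-k])
    return total / (k * k)

def local_std(img, radius=3):
    """Per-pixel texture feature: standard deviation of the local window."""
    mean = box_mean(img, radius)
    mean_sq = box_mean(img * img, radius)
    return np.sqrt(np.maximum(mean_sq - mean * mean, 0.0))

def dilate(mask):
    """Binary dilation with a 3x3 square structuring element."""
    p = np.pad(mask, 1, mode="constant", constant_values=False)
    out = np.zeros_like(mask)
    for dy in range(3):
        for dx in range(3):
            out |= p[dy:dy + mask.shape[0], dx:dx + mask.shape[1]]
    return out

def erode(mask):
    """Binary erosion, expressed through dilation of the complement."""
    return ~dilate(~mask)

def make_scene(rng, size=64):
    """Hypothetical scene: smooth background with a rough square ROI."""
    truth = np.zeros((size, size), dtype=bool)
    truth[20:44, 20:44] = True
    noise_scale = np.where(truth, 40.0, 2.0)
    image = 100.0 + rng.normal(0.0, 1.0, (size, size)) * noise_scale
    return image, truth

rng = np.random.default_rng(0)

# "Training": learn one threshold from a labelled scene (stand-in for an SVM).
train_img, train_truth = make_scene(rng)
train_feat = local_std(train_img)
threshold = 0.5 * (train_feat[train_truth].mean() + train_feat[~train_truth].mean())

# Deployment: classify every pixel of a new scene as ROI or background.
test_img, test_truth = make_scene(rng)
raw_mask = local_std(test_img) > threshold

# Refinement: opening removes isolated specks, closing fills pinholes.
opened = dilate(erode(raw_mask))
mask = erode(dilate(opened))

iou = (mask & test_truth).sum() / (mask | test_truth).sum()
print(f"IoU against ground truth: {iou:.2f}")
```

Because the feature is computed over a window, the predicted ROI tends to bleed a pixel or two past the true boundary. A smaller window tightens the boundary at the cost of a noisier feature, which is the usual trade-off to tune.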

Texture Segmentation: Dividing an image into regions with distinct textural properties, for example:

separating foreground from background,

segmenting different land cover types in satellite imagery. The algorithm decides “forest” vs. “grassland” vs. “urban” based on the statistical properties of the pixel patterns (e.g., roughness, regularity, and directionality).

Anomaly Detection: Isolating areas with unusual or different textures compared to the surrounding regions.

2.3. Texture Characteristics Identification (Texture Analysis and Interpretation)

The technical process involves:

Image preprocessing - read image and convert to grayscale —>

Feature extraction (Gabor, GLCM, Wavelet, LBP) —> apply one or more descriptors to extract texture features from the image —>

Feature Vector Creation - combine the extracted features into a single combined feature vector for each pixel or region —>

Train for Classification - use a classifier such as a Support Vector Machine (SVM), to train a model that can distinguish texture characteristics —>

Deploy Classifier - use/deploy trained texture classifier to predict textural class of new images

Texture Characterization: Quantifying properties like coarseness, directionality, regularity, and randomness of a texture.

In quality control, assessing the quality or uniformity of a manufactured product based on its surface texture.

Pattern Analysis: Identifying recurring patterns or structures within a texture.

In environmental monitoring, analyzing changes in surface textures over time, such as in land use or vegetation health.
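Properties such as directionality and regularity can be turned into numbers with a grey-level co-occurrence matrix (GLCM). The sketch below is a NumPy-only illustration using the common definitions of contrast, homogeneity, and energy. The function names, the 8-level quantisation, and the striped test image are assumptions made for this example, not part of the TAK code.

```python
import numpy as np

def glcm(gray, dy, dx, levels=8):
    """Normalised, symmetric co-occurrence matrix for pixel offset (dy, dx)."""
    g = np.asarray(gray, dtype=np.float64)
    # Quantise intensities in [0, 255] down to `levels` grey levels.
    q = np.clip((g / 256.0 * levels).astype(np.int64), 0, levels - 1)
    h, w = q.shape
    # Pair every pixel with its neighbour at the given offset.
    a = q[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
    b = q[max(0, dy):h - max(0, -dy), max(0, dx):w - max(0, -dx)]
    m = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(m, (a.ravel(), b.ravel()), 1.0)
    m = m + m.T  # count each pair in both directions
    return m / m.sum()

def glcm_properties(p):
    """Contrast, homogeneity, and energy of a normalised GLCM."""
    i, j = np.indices(p.shape)
    return {
        "contrast": float((p * (i - j) ** 2).sum()),
        "homogeneity": float((p / (1.0 + (i - j) ** 2)).sum()),
        "energy": float(np.sqrt((p ** 2).sum())),
    }

# Hypothetical test texture: horizontal stripes, 4 pixels thick.
y, x = np.mgrid[0:32, 0:32]
stripes = ((y // 4) % 2) * 255.0

along = glcm_properties(glcm(stripes, dy=0, dx=1))   # offset along the stripes
across = glcm_properties(glcm(stripes, dy=1, dx=0))  # offset across the stripes
print("along :", along)
print("across:", across)
```

Along the stripes every pixel pair has the same grey level, so contrast is zero and homogeneity is one. Across the stripes the contrast rises. Comparing a property over several offsets in this way is one simple measure of directionality.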

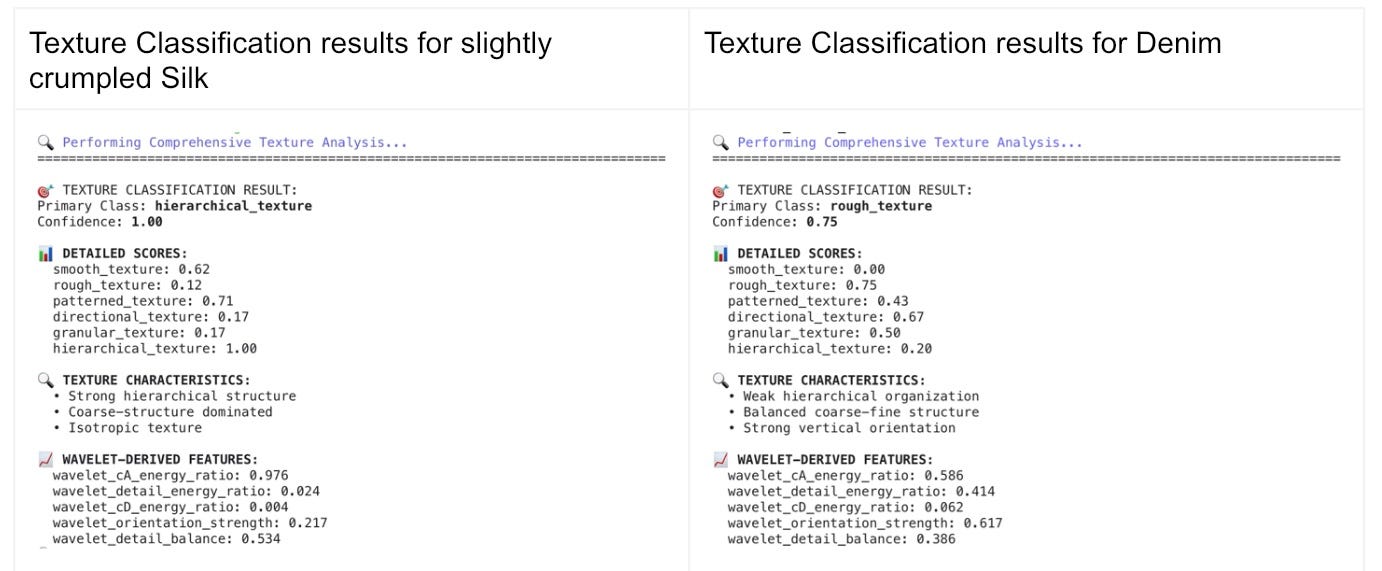

Illustration 4 - Sample Texture Classification outputs for various Fabrics

3.0. Complementary Role for Shape-Defined Objects

While texture dominates material identification, objects defined primarily by geometric shape (e.g., stop signs, keys) rely more on edge and contour analysis. However, texture still provides valuable confirming evidence about material properties.

4.0. Transition to Implementation

Various types of <<texture descriptors>> can be used to extract features that form a “fingerprint” or “signature” for a material. These features are then used to train machine learning models (like a Support Vector Machine or Random Forest) to recognize the fingerprints.
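One way to picture such a “fingerprint” is as a short vector of filter-bank energies. The sketch below builds a small Gabor bank in NumPy and reports how much energy a texture carries at each orientation. The kernel parameters (wavelength 8, sigma 4, size 21, four orientations) and the sinusoidal test images are assumptions chosen for illustration. Filtering is done by circular FFT convolution, which shifts the response but leaves its energy unchanged.

```python
import numpy as np

def gabor_kernel(theta, wavelength=8.0, sigma=4.0, size=21):
    """Real (cosine) Gabor kernel whose sinusoid varies along direction theta."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(np.float64)
    xr = x * np.cos(theta) + y * np.sin(theta)  # coordinate along theta
    envelope = np.exp(-(x ** 2 + y ** 2) / (2.0 * sigma ** 2))
    kernel = envelope * np.cos(2.0 * np.pi * xr / wavelength)
    return kernel - kernel.mean()  # zero mean: flat regions give no response

def filter_fft(img, kernel):
    """Circular convolution via the FFT (kernel is zero-padded to image size)."""
    spectrum = np.fft.fft2(img) * np.fft.fft2(kernel, s=img.shape)
    return np.real(np.fft.ifft2(spectrum))

def gabor_fingerprint(img, n_orientations=4):
    """Normalised response energy per orientation: a tiny texture signature."""
    img = img - img.mean()
    thetas = np.arange(n_orientations) * np.pi / n_orientations
    energies = np.array([np.mean(filter_fft(img, gabor_kernel(t)) ** 2)
                         for t in thetas])
    return energies / energies.sum()

# Hypothetical test textures: sinusoidal gratings with an 8-pixel period.
y, x = np.mgrid[0:64, 0:64]
vertical_bars = np.sin(2.0 * np.pi * x / 8.0)    # intensity varies along x
horizontal_bars = np.sin(2.0 * np.pi * y / 8.0)  # intensity varies along y

fp_vertical = gabor_fingerprint(vertical_bars)
fp_horizontal = gabor_fingerprint(horizontal_bars)
print("vertical bars  :", np.round(fp_vertical, 3))
print("horizontal bars:", np.round(fp_horizontal, 3))
```

The two gratings produce fingerprints that peak at different orientations, which is the kind of separation a classifier can learn from. A practical bank would usually span several wavelengths as well as orientations.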

These theoretical advantages become concrete when we examine how specific texture descriptors tackle real-world “what” problems:

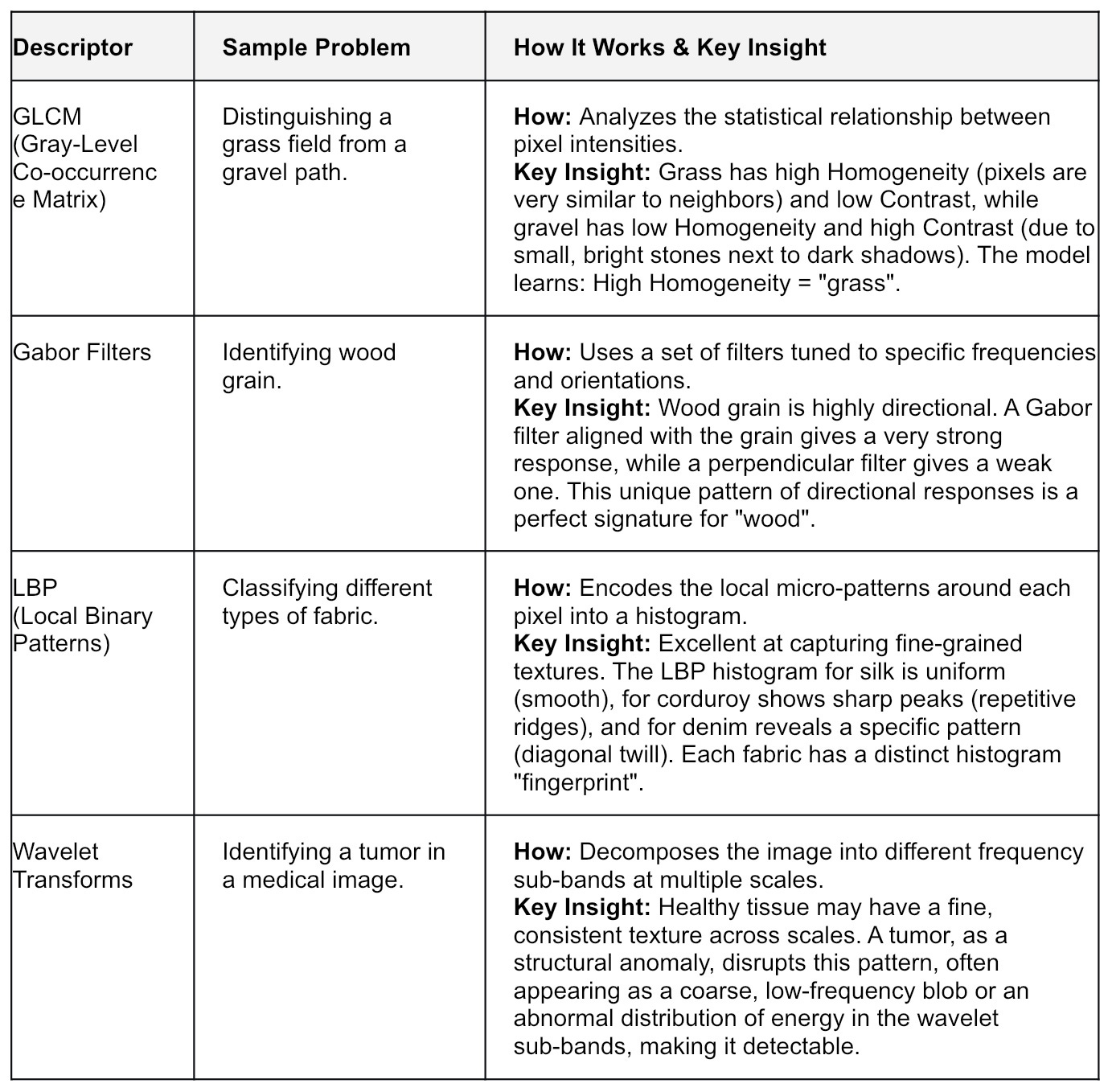

Illustration 5 - Table summarising how Texture Descriptors aid in the “what” Question

The Bottom Line: For material classification and non-rigid object identification, texture often provides the most discriminative features, sometimes making shape analysis secondary or even unnecessary.

5.0. Conclusion

A good practice when working with images is to first understand their full potential for the business. Before diving into texture descriptors and classification models, pause and ask the fundamental business questions:

Strategic Foundation:

What steady streams of image data does our business already capture?

What hidden insights lie within our existing image repositories?

How could image-derived intelligence complement our current business knowledge?

What processes, customer experiences, or decisions could be transformed by understanding image content better?

Execution Reality:

You may not get clear answers to all questions immediately—and that’s okay.

Use AI to help explore and shape these insights rapidly. This is what we call AI-native thinking: building with AI as a co-pilot from day one.

While this strategic questioning may feel “non-technical,” it’s the foundation for:

Purposeful innovation that solves real business problems

Optimal resource allocation that avoids wasted experimentation

Strategic pilots that create genuine business differentiators

This mindset doesn’t just build better models; it avoids costly, unfocused experimentation while creating competitive advantages that matter.

Don’t forget to have fun while you are at it 🌈

References : 🎁

Playground Code: <<on Colab>>

How “Texture” affects the “Where” in Images: <<access blog>>

How “Texture” affects the “When” in Images: <<access blog>>

Color Science: <<access Express Guide>>

About our Process: <<access process>>
