How “Texture” affects the “Where” (Identification) in Images

Texture Segmentation and Boundary Detection

Texture is a primary driver of image segmentation. The ‘where’ question is fundamentally about boundaries: where one material ends and another begins, as revealed by their distinct textural signatures.

In this blog we discuss:

how texture analysis and features address the main categories of “where” problems, including the high-level technical process and key application areas

key considerations when using texture features in spatial analysis

the transition to implementation using texture descriptors such as LBP, Gabor, GLCM, and Wavelet Transforms

We further complement the discussion with our hands-on playground code (in Python, OpenCV, scikit-learn) for a Texture Analysis Kit (TAK) on Google Colab, to aid those wishing to start experimenting quickly.

2.0. Categories of “Where” Challenges

Tasks such as texture segmentation and boundary detection in images are assisted by various quantifiable textural properties (statistical, local, structural, transforms), or features (LBP, Gabor, GLCM, Wavelets) derived from the spatial arrangement and variation of pixel intensities. These features allow algorithms to distinguish between different textured regions and locate their boundaries. Used individually or combined, the features provide the quantitative data needed by algorithms to partition an image into meaningful regions based on their texture content.

2.1. Texture Segmentation (Finding Regions - The “where” of homogeneous areas)

The technical process involves:

Image preprocessing - read image and convert to grayscale —>

Feature Extraction (Gabor, GLCM, wavelet, or LBP) - apply one or more descriptors to extract texture features from the image —>

Feature Vector Creation - combine the extracted features into a single combined feature vector for each pixel or region —>

Train for Clustering/Classification - use a clustering algorithm (e.g., K-Means, Mean Shift) or a classifier (e.g., SVM, Random Forest) to group pixels/regions with similar texture features —>

Deploy Classifier - Deploy the trained classifier —>

Segmentation Map Generation - Assign a label to each pixel/region based on its cluster or classification result, creating a segmented image.
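The steps above can be sketched end to end on a synthetic image. This is a minimal illustration, not the TAK playground code: the helper names (`lbp_codes`, `segment_by_texture`, `make_demo_image`), the tile size, and the striped test image are all assumptions made for the example. It uses a basic 8-neighbour LBP written in NumPy as the descriptor, per-tile LBP histograms as feature vectors, and scikit-learn's K-Means for clustering.

```python
import numpy as np
from sklearn.cluster import KMeans

def lbp_codes(gray):
    """Basic 8-neighbour LBP (radius 1); edge-padded so the output keeps the input shape."""
    g = np.pad(gray.astype(np.float64), 1, mode="edge")
    h, w = gray.shape
    center = g[1:-1, 1:-1]
    # Neighbour offsets (dy, dx), clockwise from top-left; each one contributes one bit.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros((h, w), dtype=np.uint8)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = g[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
        codes += (neighbour >= center).astype(np.uint8) * np.uint8(1 << bit)
    return codes

def segment_by_texture(gray, tile=16, n_clusters=2, seed=0):
    """Cluster non-overlapping tiles by their LBP histograms; return a per-pixel label map."""
    codes = lbp_codes(gray)
    rows, cols = gray.shape[0] // tile, gray.shape[1] // tile
    features = []
    for r in range(rows):
        for c in range(cols):
            block = codes[r * tile:(r + 1) * tile, c * tile:(c + 1) * tile]
            # Feature vector for this tile: normalised 256-bin histogram of LBP codes.
            features.append(np.bincount(block.ravel(), minlength=256) / block.size)
    km = KMeans(n_clusters=n_clusters, n_init=10, random_state=seed)
    labels = km.fit_predict(np.array(features))
    # Expand tile labels back to pixel resolution to form the segmentation map.
    return np.kron(labels.reshape(rows, cols), np.ones((tile, tile), dtype=int))

def make_demo_image(h=64, w=128, seed=0):
    """Synthetic image (assumption): vertical stripes on the left, horizontal on the right."""
    rng = np.random.default_rng(seed)
    y, x = np.mgrid[0:h, 0:w]
    img = np.where(x < w // 2, np.sin(2 * np.pi * x / 8), np.sin(2 * np.pi * y / 8))
    return img + rng.normal(0, 0.05, size=(h, w))

seg_map = segment_by_texture(make_demo_image())
# Cluster ids are arbitrary; the two halves should simply receive different ids.
print(seg_map[0, ::16])
```

Tile-level clustering keeps the example short, at the cost of a blocky map. A per-pixel variant would compute the histogram in a sliding window around each pixel instead.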

Key application areas:

Object Detection - finding skin regions in cluttered images using LBP’s consistent micro-texture, or finding a gravel path running through a grassy lawn. In the second case the colors might be similar (brownish-green grass, brownish-gray gravel), but the textures are completely different: the path is a noisy, high-frequency texture, while the grass is a softer, more vertically oriented texture. This difference defines the “where.”

Material Zoning - segmenting urban vs. forest areas in satellite imagery using statistical texture properties.

Surface Analysis - identifying defect-free vs. defective regions in manufacturing inspection.

2.2. Boundary Detection (Finding Edges - The “where” of transitions between regions)

The technical process involves:

Image preprocessing - read image and convert to grayscale —>

Feature Extraction (Gabor, GLCM, wavelet, or LBP) - apply one or more descriptors to extract texture features from the image —>

Feature Vector Creation - combine the extracted features into a single combined feature vector for each pixel or region —>

Edge/Boundary Detection - analyze the extracted feature maps to identify regions of significant change, which indicate boundaries. This can involve:

Thresholding - apply a threshold to the feature map to highlight strong responses.

Gradient based methods - calculate gradients of the feature maps to find areas of rapid change.

Machine Learning - train a classifier to distinguish between boundary and non-boundary regions based on the extracted features.
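As a rough sketch of the gradient-based and thresholding options above, the example below builds a feature map, takes its gradient magnitude, and thresholds it. Local standard deviation is used here only as a simple stand-in for the statistical texture features discussed in this blog. The function names, the 9x9 window, the 0.5 relative threshold, and the synthetic "road vs. forest" image are assumptions for illustration.

```python
import numpy as np

def box_mean(img, k):
    """Mean over a k x k window (k odd) via an integral image; borders are reflect-padded."""
    pad = k // 2
    p = np.pad(img.astype(np.float64), pad, mode="reflect")
    ii = np.cumsum(np.cumsum(p, axis=0), axis=1)
    ii = np.pad(ii, ((1, 0), (1, 0)))  # leading zero row/column simplifies window sums
    h, w = img.shape
    window_sum = ii[k:k + h, k:k + w] - ii[:h, k:k + w] - ii[k:k + h, :w] + ii[:h, :w]
    return window_sum / (k * k)

def local_std(gray, k=9):
    """Local standard deviation in a k x k window: a simple statistical texture feature map."""
    g = gray.astype(np.float64)
    var = box_mean(g * g, k) - box_mean(g, k) ** 2
    return np.sqrt(np.clip(var, 0, None))

def texture_boundary(gray, k=9, rel_thresh=0.5):
    """Gradient magnitude of the feature map, plus a mask thresholded relative to its maximum."""
    feat = local_std(gray, k)
    gy, gx = np.gradient(feat)
    grad_mag = np.hypot(gx, gy)
    return grad_mag, grad_mag > rel_thresh * grad_mag.max()

# Synthetic "road vs. forest" (assumption): smooth left half, noisy right half.
rng = np.random.default_rng(0)
img = np.full((64, 128), 0.5)
img[:, :64] += rng.normal(0, 0.01, size=(64, 64))
img[:, 64:] += rng.normal(0, 0.20, size=(64, 64))

grad_mag, edges = texture_boundary(img)
boundary_col = int(np.argmax(grad_mag.mean(axis=0)))
print("strongest texture change near column", boundary_col)
```

Because the feature is computed over a window, the detected boundary is smeared and can sit a few pixels away from the true transition (roughly up to half the window width). A smaller window localizes better but gives a noisier feature map.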

Key application areas:

Natural Boundaries - detecting the coastline between a sandy beach (low entropy) and ocean waves (high entropy).

Structural Boundaries - finding edges between a brick wall (oriented patterns) and the sky (uniform texture).

Material Transitions - separating a road (smooth) from a forest (complex, high-frequency texture).

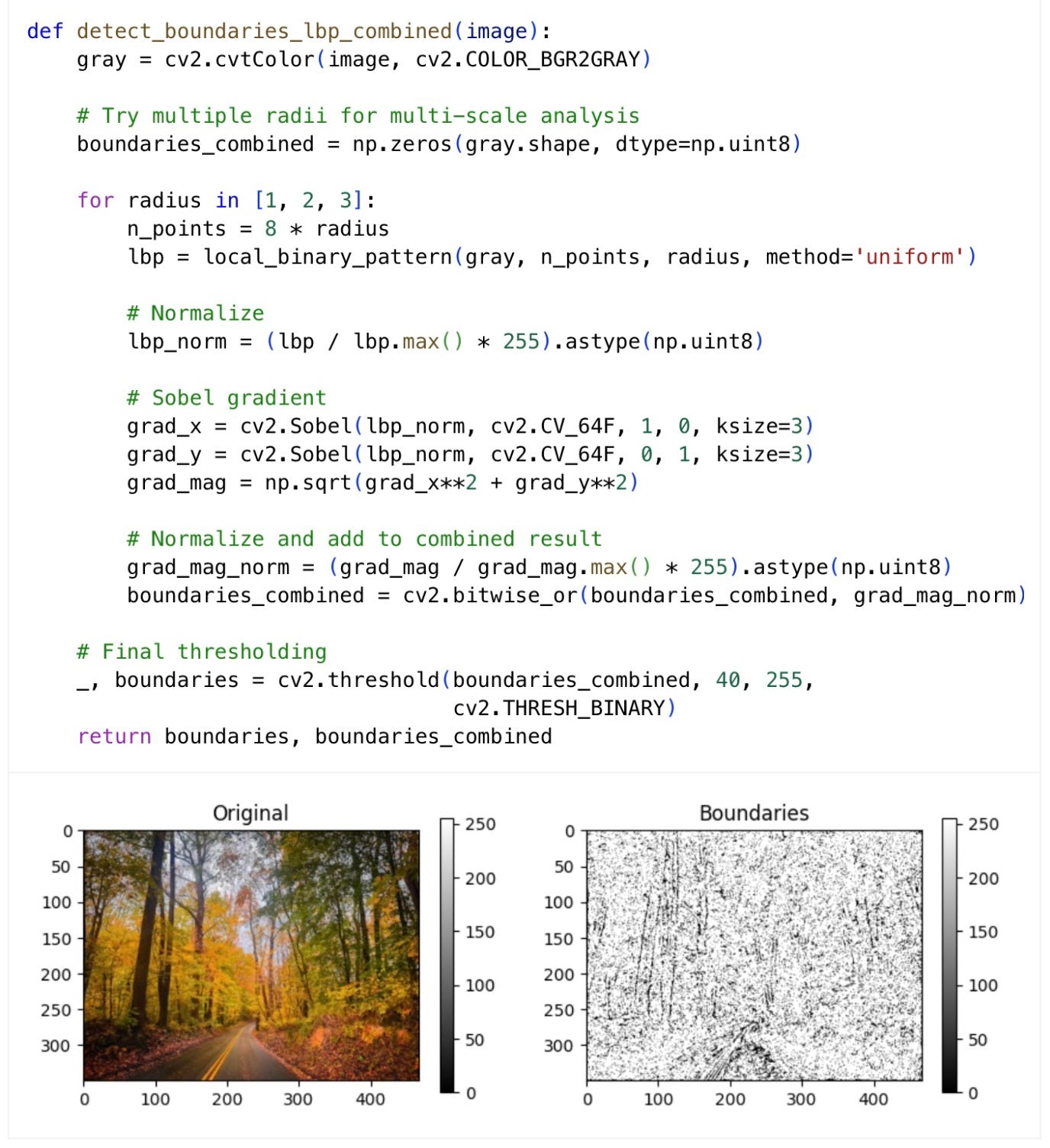

Illustration 1 - Using LBP Texture Features to ‘Find the Road in the Forest’ - a Boundary Detection Example

3.0. Important Considerations for Spatial Analysis

While powerful for segmentation, texture-based localization works best when complemented by other cues. For instance, strong shadows can alter local brightness and contrast, potentially creating false texture boundaries. A robust system often combines texture with color consistency and illumination-invariant features for accurate ‘where’ detection.

3.1. Illumination-invariant Features

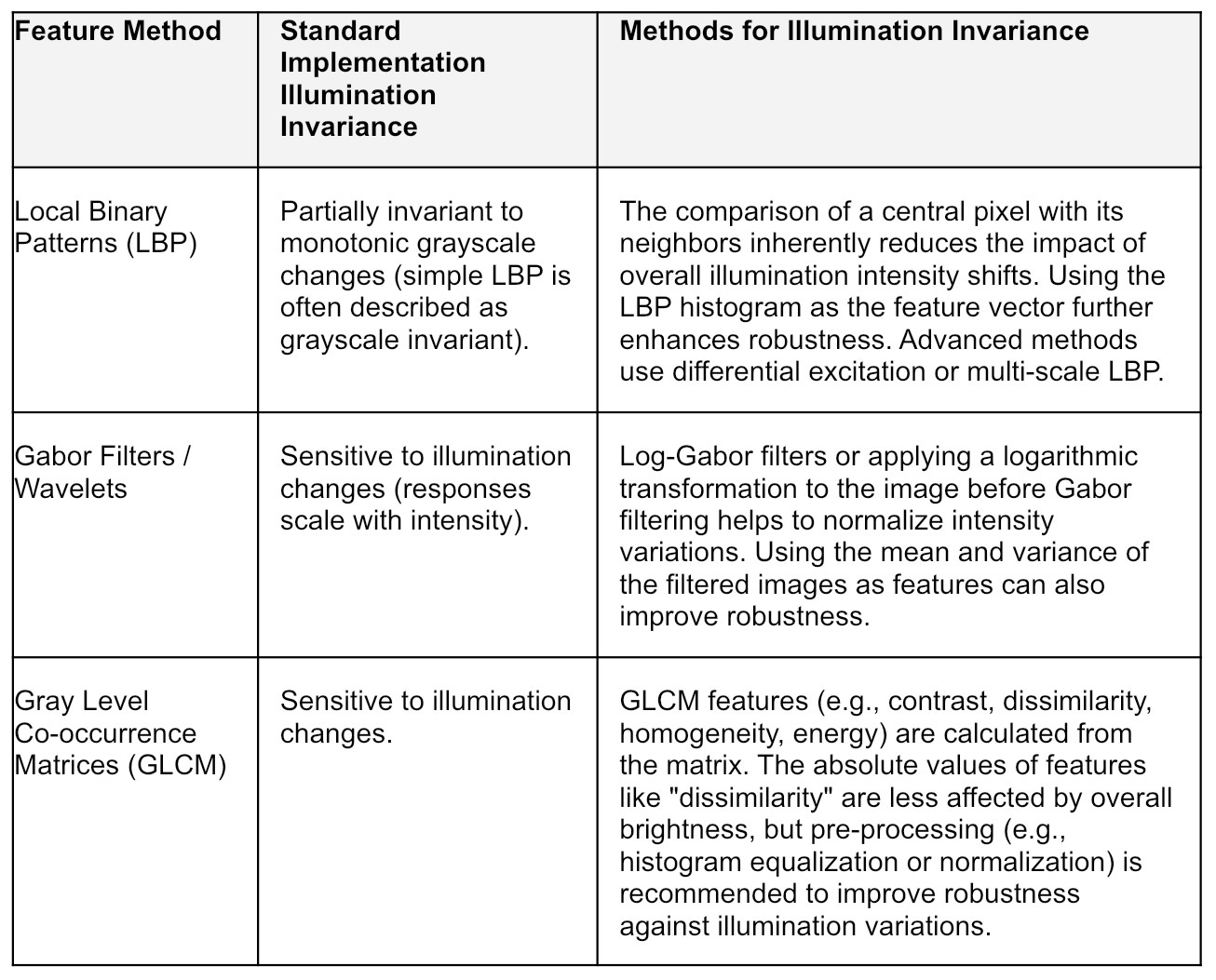

Illumination-invariant features in texture analysis can be achieved either by using methods specifically designed to handle lighting variations or by applying a pre-processing step, such as a logarithmic transformation or grayscale normalization, before extracting features. Standard implementations of Gabor, wavelet, and GLCM features are generally sensitive to illumination changes, and basic LBP, although unaffected by monotonic gray-level changes, can still be disturbed by uneven lighting such as shadows. Variants exist to address this, as summarized in Illustration 2.

Illustration 2 - Illumination-Invariant Techniques

Alternatively, you can explore other advanced features and descriptors, such as Scale-Invariant Feature Transform (SIFT) or Oriented FAST and Rotated BRIEF (ORB), for illumination invariance.
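To make the pre-processing route from this section concrete, here is a small sketch of a logarithmic transformation followed by grayscale normalization. The function name `log_normalize`, the `eps` guard, and the random test patch are assumptions for the example. The reasoning is that a global brightness gain multiplies every pixel by the same factor, the logarithm turns that factor into an additive offset, and subtracting the mean removes the offset.

```python
import numpy as np

def log_normalize(gray, eps=1e-6):
    """Log transform, then zero-mean / unit-variance scaling over the whole image."""
    log_img = np.log(gray.astype(np.float64) + eps)  # eps guards against log(0)
    return (log_img - log_img.mean()) / (log_img.std() + eps)

rng = np.random.default_rng(0)
texture = rng.uniform(0.2, 1.0, size=(64, 64))  # hypothetical textured patch
dimmed = 0.5 * texture                          # same surface at half the brightness

# Before: a simple contrast statistic differs between the two versions.
raw_gap = abs(texture.std() - dimmed.std())
# After: the normalised images agree almost exactly, pixel by pixel.
norm_gap = np.abs(log_normalize(texture) - log_normalize(dimmed)).max()
print(f"std gap before: {raw_gap:.4f}, max pixel gap after: {norm_gap:.2e}")
```

This only cancels a global multiplicative change. Local effects such as cast shadows would need the same idea applied per window or per region, which is where the descriptor variants come in.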

4.0. Transition to Implementation

These theoretical advantages become concrete when we examine how specific texture descriptors tackle real spatial localization problems:

Illustration 3: Table summarising how Texture Descriptors aid in the “where” Question.

The Bottom Line: For the “where” question, texture is critically important for partitioning an image into meaningful regions, especially when color is unreliable.

5.0. Conclusion

When it comes to the “where” question, there is no one-size-fits-all approach, because different applications have different requirements. For instance, in medical imaging, precision and accuracy are valued, while in industrial inspection, robustness to specific defect types takes precedence.

Methods range from classical Computer Vision (Canny, Active Contours, etc.) to ML and advanced Deep Learning (e.g., U-Net, DeepLab v3+). Feature descriptors for texture/pattern boundaries are also evolving, with LBP variants (e.g., Multi-scale LBP), deep texture descriptors (CNN features learned from texture datasets), and SIFT and HOG (when shape boundaries are important).

It is certainly not all in a day’s work, but keeping pace is key for adopting ready-made solutions, upgrading current tooling, and developing new ones.

Don’t forget to have fun while you are at it 🌈

References : 🎁

Playground Code: <<on Colab>>

How “Texture” affects the “What” in Images: <<access blog>>

How “Texture” affects the “When” in Images: <<access blog>>

Color Science: <<access Express Guide>>

About our Process: <<access process>>